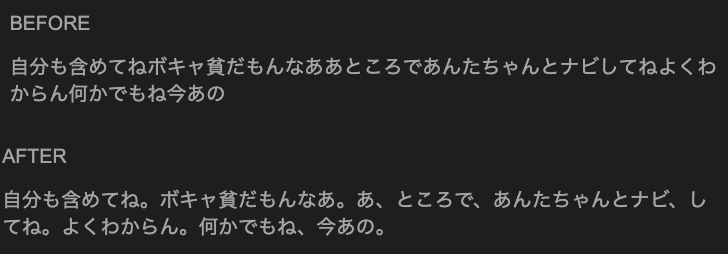

Punctuating passages is a difficult task for Neural Network. The task gets even more challenging when it is a spoken transcript in a language like Japanese. Unlike English, There is no space between words for Japanese.

To oversimplify the task, these are the 3 main steps required foe this task. I got the best results using BiLSTM CRF model.

We first need to tokenize the words using a Tokenizer.

Then design the Input Sequence with relevant context.

Do some feature Engineering and make the prediction.